Juice Sucking Servers, part deux

A follow-up post, in which the measurements are done with a patched version of wrk, appears in part trois. It also has a better memory overview graph.

Introduction

After my previous blog post, a lot of friendly people on the Swift forums helped me understand and circumvent the weird behaviour of Swift compared to the other languages. There were several problems at play:

- I was using a Swift BigInt library that is not well maintained, whereas the BigInt libraries of the other languages were optimised.

- Vapor 3.92 was slow in accepting the first request. This would lead to a timeout of > 2 seconds for the first request, even though later requests were handled more swiftly. This has been fixed in Vapor 3.96.

- I was logging to the console at info level instead of error.

In this post I have updated the graphs after benchmarking with the community enhancements.

Vapor 3.92 vs 3.96

First, an explanation from Johannes Weiss of the SwiftNIO team. Vapor starts slowly because by default it accepts only 4 connections at a time. It starts working on these before accepting new connections.

So why is the first request slow?

SwiftNIO's default setting is to only accept 4 connections in a burst (even if there are 100 new connections, it'll just accept 4 each EventLoop tick). So in a way it prioritises existing connections over new connections under high load.

Gwynne Raskind quickly patched Vapor to increase this number to 256 and to make it configurable.
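
To get a feel for why the burst size matters, here is a minimal sketch in Swift. It is a toy model, not SwiftNIO or Vapor code: the function name `ticksToAccept` and its parameters are made up for illustration, and it assumes the only limit is the number of connections accepted per event-loop tick.

```swift
// Toy model (hypothetical helper, not part of SwiftNIO or Vapor):
// how many event-loop ticks pass before `pending` queued connections
// have all been accepted, if at most `burst` are accepted per tick.
func ticksToAccept(pending: Int, burst: Int) -> Int {
    precondition(pending >= 0 && burst > 0, "pending must be >= 0 and burst > 0")
    // Ceiling division: a partially filled last burst still costs a tick.
    return (pending + burst - 1) / burst
}

let pending = 100
print("burst 4:   \(ticksToAccept(pending: pending, burst: 4)) ticks")
print("burst 256: \(ticksToAccept(pending: pending, burst: 256)) ticks")
```

With 100 connections waiting, a burst of 4 needs 25 ticks and a burst of 256 needs one. In a real server each tick also does work for the connections that were already accepted, so the last connections in the queue probably wait longer than this model suggests.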

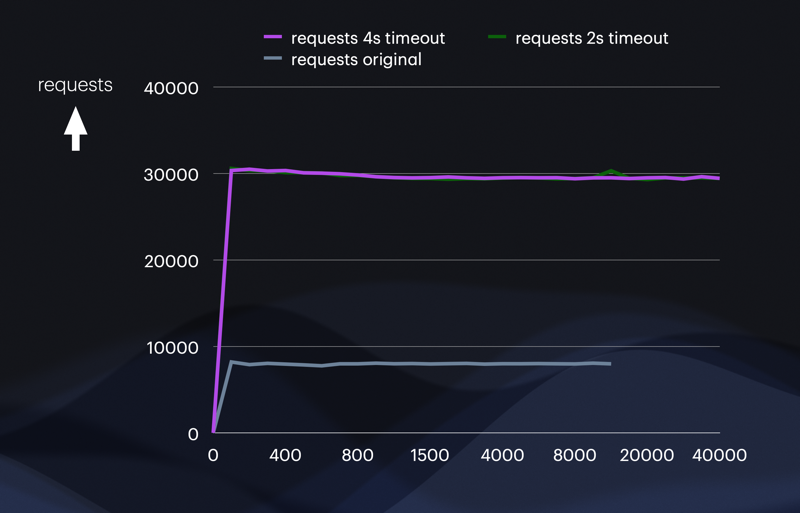

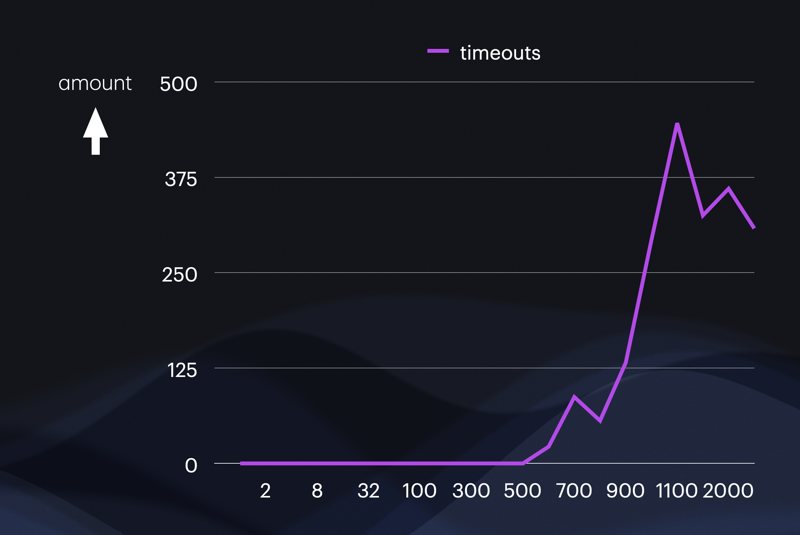

You can see that the immediate timeouts no longer occur; timeouts now start only at around 300 requests. We will increase this number by implementing a faster algorithm.

Numberick & Vapor 3.96 vs BigInt

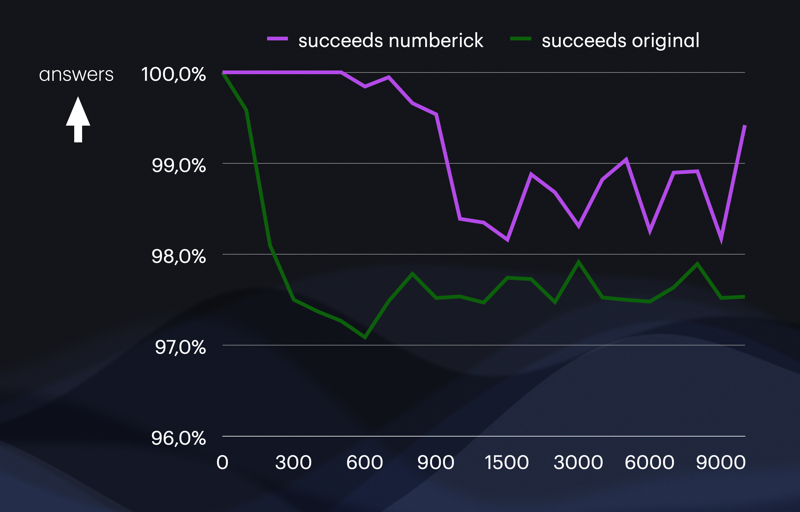

Wade Tregaskis from the Swift forums did some optimising of the code. He swapped out the venerable BigInt package for the nimbler Numberick package. He also changed the ‘info’ logging to ‘error’. The speed-up is tremendous and many more requests can be served.

The grey line is the original benchmark with the BigInt package.

One further suggested improvement I did not apply: a more optimised Fibonacci algorithm. This would give another 5 times speed-up, but in my opinion that would be cheating compared to the other benchmarks.
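
For illustration only, here is what such an algorithmic change could look like. I am not claiming this is the variant that was suggested on the forums: fast doubling is simply one well-known faster way to compute Fibonacci numbers, and the function names below are my own. To keep the sketch self-contained it uses `UInt64` instead of a big integer type, so it only works up to n = 92.

```swift
// Straightforward loop: n additions.
func fibIterative(_ n: Int) -> UInt64 {
    precondition((0...92).contains(n), "UInt64 only holds F(n) safely up to n = 92 here")
    var a: UInt64 = 0   // F(i)
    var b: UInt64 = 1   // F(i + 1)
    for _ in 0..<n {
        (a, b) = (b, a + b)
    }
    return a
}

// Fast doubling: returns (F(n), F(n + 1)) in about log2(n) steps, using
//   F(2k)     = F(k) * (2 * F(k + 1) - F(k))
//   F(2k + 1) = F(k)^2 + F(k + 1)^2
func fibPair(_ n: Int) -> (UInt64, UInt64) {
    if n == 0 { return (0, 1) }
    let (a, b) = fibPair(n / 2)   // a = F(k), b = F(k + 1) with k = n / 2
    let c = a * (2 * b - a)       // F(2k)
    let d = a * a + b * b         // F(2k + 1)
    return n % 2 == 0 ? (c, d) : (d, c + d)
}

func fibFastDoubling(_ n: Int) -> UInt64 {
    precondition((0...92).contains(n), "UInt64 only holds F(n) safely up to n = 92 here")
    return fibPair(n).0
}

print(fibIterative(50), fibFastDoubling(50))
```

Both functions return the same values; the second one just needs far fewer arithmetic steps for large n. With big integers the multiplications get more expensive, so the actual gain depends on the library.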

You can see that, combined with the Vapor 3.96 update, the timeouts at the beginning do not occur anymore.

A request again counts as a success if it returns an answer within the timeout period of 2 seconds. But what happens if we increase this timeout period?

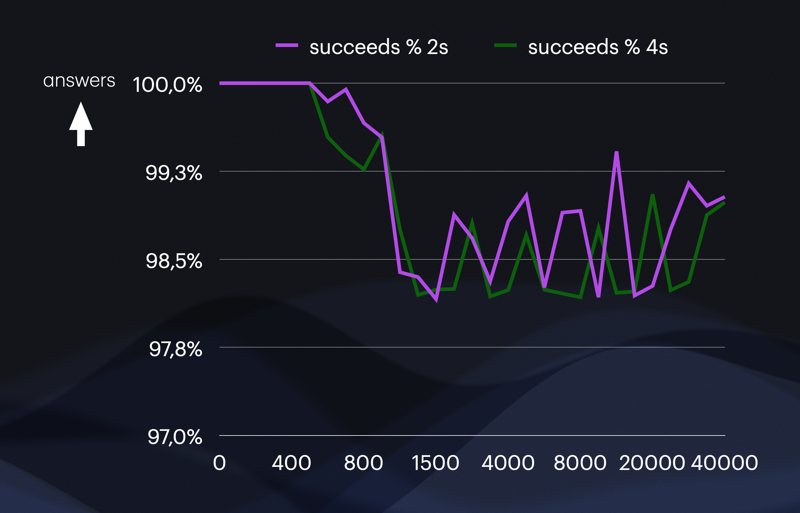

timeout of 4 instead of 2 seconds for wrk

I increased the wrk default timeout from 2 to 4 seconds. This did not make any significant difference, as predicted by Jonathan.

Linear latency

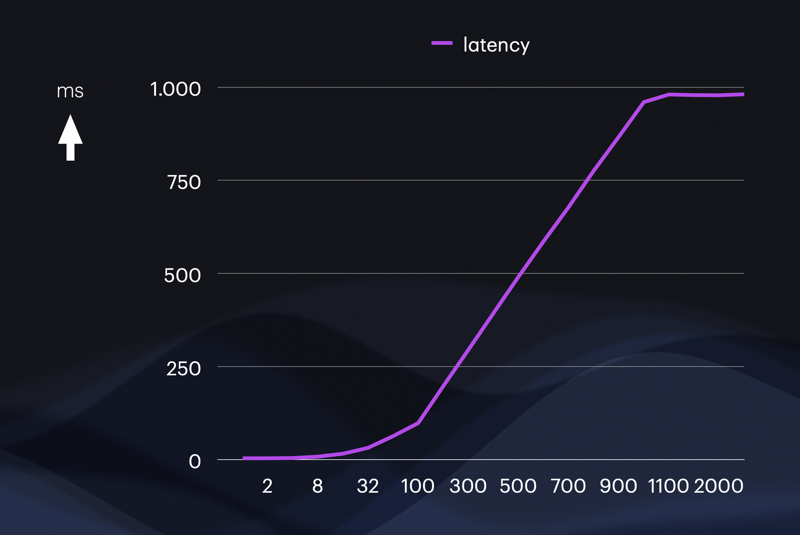

The time for each calculation is about 3.6 ms per core. When we add concurrency slowly, we see a nice linear correlation up until the point where the system with 2 processors + 2 virtual cores is overloaded, at around 510 requests per second. Each request is then handled within 1 second.

We also see that the linearity drops when the timeouts start getting serious.
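
As a rough plausibility check on these numbers, the sketch below computes the throughput ceiling you would expect if the work were purely CPU-bound and spread perfectly over the cores. That is an assumption on my part, not something I measured, and the helper name `maxRequestsPerSecond` is made up for this example.

```swift
// Upper bound on throughput if each of `cores` cores needs `serviceTime`
// seconds of CPU per request and nothing else limits the server.
func maxRequestsPerSecond(cores: Int, serviceTime: Double) -> Double {
    precondition(cores > 0 && serviceTime > 0, "cores and serviceTime must be positive")
    return Double(cores) / serviceTime
}

let serviceTime = 0.0036 // 3.6 ms per calculation, taken from the text above
let physical = maxRequestsPerSecond(cores: 2, serviceTime: serviceTime)
let logical = maxRequestsPerSecond(cores: 4, serviceTime: serviceTime)
print("2 cores: about \(Int(physical.rounded())) requests per second")
print("4 cores: about \(Int(logical.rounded())) requests per second")
```

This gives roughly 556 requests per second for 2 cores and 1111 for 4. The overload point of around 510 requests per second sits close to what two fully busy cores could deliver, which would suggest that the 2 virtual cores add little for this workload. Treat that as a hint rather than a conclusion.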

CPU vs memory bound

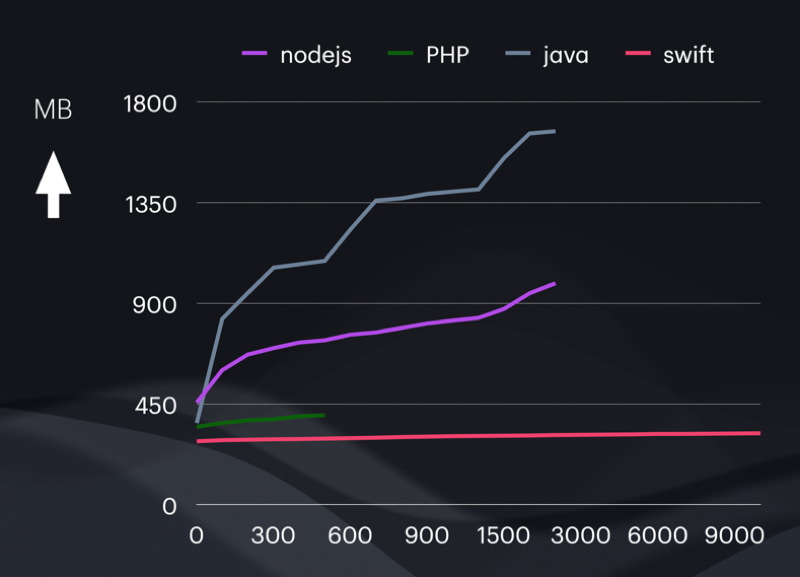

What I can clearly see is that Vapor and Swift are very memory efficient. Even when serving 10000 requests, the memory increase was perhaps 4-6 MB. In the discussions on the forum this was seen as both an advantage and a disadvantage:

In Java and JavaScript, allocating memory is very fast, so they rapidly consume a lot of memory to increase throughput. Only later does the garbage collector kick in, freeing up memory. However, because wrk keeps hammering the system, the garbage collector almost never gets the time to do so. Thus the huge increase in memory usage there.

In Swift, memory allocation is more expensive time-wise, and you see that in the reduced base throughput. However, it is still far more efficient with memory.

Conclusion

I still don’t understand why we don’t see the catastrophic breakdowns we see with the other languages. Vapor drops some requests, but seems to fulfill 98% of requests, or it does something naughty which I cannot put my finger on.

Thanks

Thanks to all the wonderful people at the Swift server forums.